Scraping App Data with Python (Easy to Understand)

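Hi everyone, I'm 编程小6, and I'm glad you're here. I write up problems and ideas I've run into over years of development. Today's topic is scraping app data with Python, and I hope it helps!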


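Can the data inside an app be scraped with a crawler?

Yes: as a rule, any data an app shows you can be captured.

Below I share what I learned and the methods I use. This post is aimed at programmers who already have some web-scraping experience.

Without that background, you may find it harder going.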

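#### Scraping data through an app's API: the workflow

- Use a packet-capture tool

- Set a proxy on the phone so that all of the app's requests pass through the capture tool

- Find the API endpoints and analyze them

- Decompile the APK to obtain the signing key

- Get around anti-scraping restrictions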
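The captured requests in the example further down carry `timestamp` and `signature` headers, which is typically where a key pulled from the APK comes in: the app signs each request, and a scraper has to reproduce that signature. The real algorithm has to be read out of the decompiled code. The sketch below uses a purely hypothetical scheme (MD5 over the sorted parameters, a millisecond timestamp and a secret key) only to illustrate the idea; `sign_params` and the string layout are assumptions, not the Chargerlink app's actual method.

```python
import hashlib
import time


def sign_params(params, secret, timestamp=None):
    """Return (timestamp, signature) under a HYPOTHETICAL signing scheme.

    Assumed scheme: sort the parameters by name, join them as k=v pairs,
    append the timestamp and the secret key, then take the MD5 hex digest.
    A real app may use a different order, separator, or hash function.
    """
    if timestamp is None:
        # 13-digit millisecond timestamp, the same shape as the captured headers
        timestamp = str(int(time.time() * 1000))
    base = "&".join(f"{key}={params[key]}" for key in sorted(params))
    raw = f"{base}&timestamp={timestamp}&key={secret}"
    return timestamp, hashlib.md5(raw.encode("utf-8")).hexdigest()


ts, sig = sign_params({"page": 1, "limit": 10}, "demo-secret", "1532342711477")
print(ts, sig)
```

To test a guessed scheme, feed it the parameters and timestamp of a request captured in Fiddler: if the output matches the captured `signature`, the scheme is right.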

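Tools needed:

- NoxPlayer (夜神模拟器), an Android emulator

- Fiddler

- PyCharm

#### Implementation

First, install the NoxPlayer emulator to simulate a phone (a real phone works too). Then install Fiddler to capture the app's network traffic. Once you have analyzed the API endpoints, write the scraper in Python.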

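#### Installing and configuring Fiddler

###### Step 1: Download Fiddler

Once Fiddler has downloaded, install it with the default options.

###### Step 2: Configure Fiddler

Open Fiddler and go to Tools -> Fiddler Options (restart Fiddler after changing these settings).

On the HTTPS tab, check "Decrypt HTTPS traffic" so that Fiddler can intercept HTTPS requests.

On the Connections tab, check "Allow remote computers to connect". This lets other machines (here, the emulator) send their HTTP/HTTPS requests to Fiddler.

Note the port Fiddler listens on: 8888 (the default).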
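Before involving the phone, you can check that Fiddler is listening by sending a request from Python through it. A minimal standard-library sketch, assuming Fiddler runs on the same machine at `127.0.0.1:8888` (with `requests`, the equivalent is passing a `proxies={...}` dict and `verify=False`). Certificate checks are switched off because Fiddler re-signs HTTPS traffic with its own certificate; only do this for local debugging. The function names are hypothetical.

```python
import ssl
import urllib.request

FIDDLER_HOST = "127.0.0.1"  # assumption: Fiddler runs on this machine
FIDDLER_PORT = 8888         # Fiddler's default listening port


def fiddler_proxies(host=FIDDLER_HOST, port=FIDDLER_PORT):
    """Proxy mapping that sends both HTTP and HTTPS traffic to Fiddler."""
    address = f"http://{host}:{port}"
    return {"http": address, "https": address}


def build_fiddler_opener(host=FIDDLER_HOST, port=FIDDLER_PORT):
    """Build a urllib opener that routes every request through Fiddler.

    Certificate verification is disabled because Fiddler presents its own
    certificate for HTTPS sites; use this for local debugging only.
    """
    context = ssl.create_default_context()
    context.check_hostname = False          # must be cleared before CERT_NONE
    context.verify_mode = ssl.CERT_NONE
    return urllib.request.build_opener(
        urllib.request.ProxyHandler(fiddler_proxies(host, port)),
        urllib.request.HTTPSHandler(context=context),
    )
```

Usage: `build_fiddler_opener().open("https://example.com", timeout=10)`. If Fiddler is set up correctly, the request shows up in its session list.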

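#### Installing and configuring the NoxPlayer emulator

###### Step 1: Download and install

Once NoxPlayer has downloaded, install it with the default options.

###### Step 2: Set up bridged networking so the emulator and the PC can reach each other

First, bridge the emulated phone's network to this computer's network so that the two can communicate.

After the bridge driver is installed, give the emulator an IP address in the same subnet as the host. Then open a cmd window on the host and ping the emulator's IP; if it replies, the bridge works.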
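"Same subnet" means the host and the emulator share the same network prefix. A small sketch with Python's `ipaddress` module that checks this before you try `ping`; the /24 prefix (netmask 255.255.255.0, common on home networks) and the example addresses are assumptions, so use the subnet mask that `ipconfig` reports.

```python
import ipaddress


def same_subnet(host_ip, emulator_ip, prefix=24):
    """Return True if emulator_ip lies in the host's network for this prefix length."""
    # strict=False accepts a host address (e.g. .10) instead of a network address (.0)
    network = ipaddress.ip_network(f"{host_ip}/{prefix}", strict=False)
    return ipaddress.ip_address(emulator_ip) in network


print(same_subnet("192.168.1.10", "192.168.1.23"))  # True
print(same_subnet("192.168.1.10", "192.168.2.23"))  # False
```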

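###### Step 3: Configure the proxy

- Open cmd on the host

- Run ipconfig to find the host's IP address

- Configure the proxy

In NoxPlayer, open Settings, then WLAN.

Tap the Wi-Fi network, choose Modify network and configure the proxy as shown in the screenshot: set Proxy to Manual, enter the host IP from ipconfig as the hostname, and 8888 as the port.

Save the settings. For Fiddler to decrypt the app's HTTPS traffic, the emulator must also trust Fiddler's root certificate: open http://(host IP):8888 in the emulator's browser and install the FiddlerRoot certificate.

Everything is now set up. To test it, open the 超级课程表 timetable app in the emulator; its requests should appear in Fiddler.

- Install the app you want to scrape in the emulator, capture and analyze its API with Fiddler, and then write the scraper in Python.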
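When Fiddler shows one of the app's requests, its Raw view (Inspectors > Raw) lists the request line followed by `Name: value` header lines, and the header dicts in the example below look like they were copied from such a capture. A small sketch of a hypothetical helper, `raw_headers_to_dict`, that turns that text into a dict for `requests`, dropping `Host` and `Content-Length` because the HTTP library sets them itself. The sample request is trimmed and its body is made up.

```python
# Headers the HTTP client computes on its own; copying them over can cause mismatches
SKIP_HEADERS = {"host", "content-length"}


def raw_headers_to_dict(raw):
    """Parse a raw HTTP request copied from Fiddler into a headers dict.

    The first line (e.g. "POST /path HTTP/1.1") is skipped, and parsing stops
    at the first blank line, where the request body begins.
    """
    headers = {}
    lines = raw.strip().splitlines()
    for line in lines[1:]:
        if not line.strip():
            break  # end of the header section
        name, sep, value = line.partition(":")  # split on the first colon only
        if not sep or name.strip().lower() in SKIP_HEADERS:
            continue
        headers[name.strip()] = value.strip()
    return headers


sample = """POST https://app-api.chargerlink.com/spot/searchSpot HTTP/1.1
appId: 20171010
forcecheck: 1
Content-Length: 68
Host: app-api.chargerlink.com
User-Agent: okhttp/3.2.0

page=1&limit=10"""

print(raw_headers_to_dict(sample))
# {'appId': '20171010', 'forcecheck': '1', 'User-Agent': 'okhttp/3.2.0'}
```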

####爬取充电网APP实例

爬取部分内容截图:

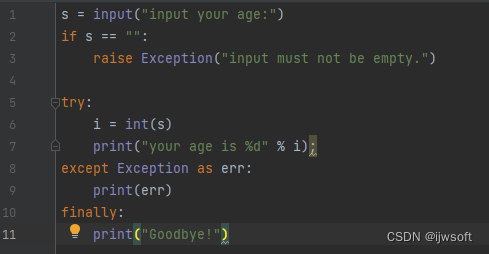

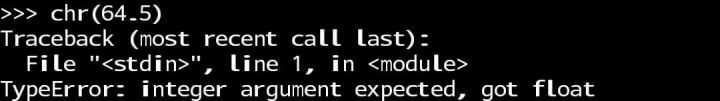

部分python代码分享:

```python
import csv

import jsonpath  # third-party: pip install jsonpath
import requests

import city  # author's own module: city-code table (jsons) and tag table (Tag), not included

city_list = city.jsons
tags_list = city.Tag

SEARCH_URL = 'https://app-api.chargerlink.com/spot/searchSpot'
DETAIL_URL = 'https://app-api.chargerlink.com/spot/getSpotDetail?spotId={d}'

# Headers captured with Fiddler. timestamp/signature belong to the moment of capture and
# will likely have to be recaptured (or recomputed) before the requests work again.
# Content-Length and Host are left out: requests fills them in itself.
SEARCH_HEADERS = {
    "device": "client=android&cityName=%E5%8C%97%E4%BA%AC%E5%B8%82&cityCode=110106&lng=116.32154281224254&device_id=8A261C9D60ACEBDED7CD3706C92DD68E&ver=3.7.7&lat=39.895024107858724&network=WIFI&os_version=19",
    "appId": "20171010",
    "timestamp": "1532342711477",
    "signature": "36daaa33e7b0d5d29ac9c64a2ce6c4cf",
    "forcecheck": "1",
    "Content-Type": "application/x-www-form-urlencoded",
    "Connection": "Keep-Alive",
    "User-Agent": "okhttp/3.2.0",
}

DETAIL_HEADERS = {
    "device": "client=android&cityName=&cityCode=&lng=116.32154281224254&device_id=8A261C9D60ACEBDED7CD3706C92DD68E&ver=3.7.7&lat=39.895024107858724&network=WIFI&os_version=19",
    "TOKEN": "036c8e24266c9089db50899287a99e65dc3bf95f",
    "appId": "20171010",
    "timestamp": "1532357165598",
    "signature": "734ecec249f86193d6e54449ec5e8ff6",
    "forcecheck": "1",
    "Connection": "Keep-Alive",
    "User-Agent": "okhttp/3.2.0",
}


def first(obj, path):
    """Return the first jsonpath match, or '' when there is none (jsonpath returns False)."""
    result = jsonpath.jsonpath(obj, path)
    return result[0] if result else ''


def city_func(city_id):
    """Map a province/city code to its name: search sub-entries first, then the top level."""
    name = first(city_list, '$..sub[?(@.code=={})].name'.format(int(city_id)))
    return name or first(city_list, '$[?(@.code=={})].name'.format(int(city_id)))


def tags_func(tags_id):
    """Translate tag ids into their titles, joined with '-'."""
    titles = []
    for tag in tags_id:
        if not tag:  # ''.split(',') gives [''], so skip empty ids
            continue
        title = first(tags_list, '$..spotFilterTags[?(@.id=={})].title'.format(int(tag)))
        if title:
            titles.append(title)
    return '-'.join(titles)


def split_n(text):
    """Replace newlines so multi-line fields stay on one CSV row."""
    return str(text or '').replace('\n', ' ')


def search(page):
    """POST the search endpoint for one page and return the parsed JSON."""
    data = {
        "userFilter[operateType]": 2,
        "cityCode": 110000,  # 110000: Beijing
        "sort": 1,
        "page": page,
        "limit": 10,
    }
    return requests.post(SEARCH_URL, data=data, headers=SEARCH_HEADERS, timeout=10).json()


def parse_info(url, message, writer):
    """Fetch one station's detail page and append a CSV row."""
    detail = requests.get(url, headers=DETAIL_HEADERS, timeout=10).json()
    price = split_n(first(detail, '$..chargingFeeDesc'))      # price
    pay_type = first(detail, '$..payTypeDesc')                 # payment methods
    business_time = split_n(first(detail, '$..businessTime'))  # opening hours
    writer.writerow(message + [price, pay_type, business_time])


def request(page, writer):
    print('Downloading page %d' % page)
    for item in search(page)['data']:
        spot_id = item['id']
        message = [
            spot_id,
            item["name"],                                  # charging station name
            item["phone"],                                 # phone number
            item['quantity'],                              # number of chargers
            city_func(item["provinceCode"]),               # city
            tags_func((item.get("tags") or '').split(',')),  # tags
        ]
        parse_info(DETAIL_URL.format(d=spot_id), message, writer)


def get_page():
    pager = search(1)["pager"]
    print('There are {total} charging stations, {size} per page, {pages} pages in total'.format(
        total=pager["total"], size=pager["pageSize"], pages=pager["totalPage"]))


if __name__ == '__main__':
    get_page()
    start = int(input("Enter the first page to fetch: "))
    end = int(input("Enter the last page to fetch: "))
    # csv.writer quotes fields containing commas, which a manual ','.join does not
    with open('car.csv', 'a', encoding='utf-8', newline='') as f:
        writer = csv.writer(f)
        for i in range(start, end + 1):
            request(i, writer)
```
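The main loop sends page requests back to back, a pattern that is easy for a server to flag. A minimal sketch of one common mitigation, a randomized pause between pages; `crawl_pages`, the `fetch` callback and the 1 to 3 second bounds are assumptions to tune, and this does nothing against signature checks or other server-side defenses.

```python
import random
import time


def crawl_pages(start, end, fetch, min_delay=1.0, max_delay=3.0):
    """Call fetch(page) for each page in [start, end], pausing between calls.

    A random delay in [min_delay, max_delay] seconds is inserted between
    pages so requests do not arrive at a fixed, machine-like rate.
    """
    if start > end:
        raise ValueError("start page must not be greater than end page")
    results = []
    for page in range(start, end + 1):
        results.append(fetch(page))
        if page < end:  # no need to wait after the last page
            time.sleep(random.uniform(min_delay, max_delay))
    return results
```

In the script above, `fetch` would be whatever function downloads and saves one page.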

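#### Summary

Data in apps is usually easier to scrape than on the web: the anti-scraping protection tends to be weaker, most traffic is plain HTTP/HTTPS, and most responses are JSON.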
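Because the responses are JSON, the `$..key` lookups the example makes with the third-party `jsonpath` package can also be done with the standard library. A minimal sketch of a recursive-descent key search (the helper name `find_all` is hypothetical); the sample reuses field names from the detail response, but its values are made up.

```python
import json


def find_all(obj, key):
    """Collect every value stored under `key` at any depth, like JSONPath `$..key`."""
    found = []
    if isinstance(obj, dict):
        for k, v in obj.items():
            if k == key:
                found.append(v)
            found.extend(find_all(v, key))  # keep searching inside the value too
    elif isinstance(obj, list):
        for item in obj:
            found.extend(find_all(item, key))
    return found


detail = json.loads('{"data": {"spot": {"payTypeDesc": "App", "businessTime": "00:00-24:00"}}}')
print(find_all(detail, "businessTime"))  # ['00:00-24:00']
```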


![Screenshot 1](https://img-blog.csdn.net/20180724094526650)

![Screenshot 2](https://img-blog.csdn.net/20180724094613198)

![Screenshot 3](https://img-blog.csdn.net/20180724095022526)

![Screenshot 4](https://img-blog.csdn.net/20180724095211491)

![Screenshot 5](https://img-blog.csdn.net/20180724095734441)

![Screenshot 6](https://img-blog.csdn.net/20180724095922805)

![Screenshot 7](https://img-blog.csdn.net/20180724103341973)

![Screenshot 8](https://img-blog.csdn.net/20180724103520937)

![Screenshot 9](https://img-blog.csdn.net/20180724124506490)

![Screenshot 10](https://img-blog.csdn.net/20180724103948909)